The Impact of the EU AI Act on Financial Services and AML

By Irene Madongo

The European Union’s Artificial Intelligence Act, which is designed to provide safeguards for consumers and boost innovation at firms, has implications for the financial services sector and for anti-money laundering (AML) controls at companies that use AI systems.

The second phase of the Act took effect on 2 August 2025, bringing new responsibilities for compliance departments at a range of firms. The legislation takes a risk-based approach to regulating AI in the EU, with obligations that scale according to the level of potential harm an AI system poses. It also sets out penalties, with fines of up to €35 million or 7% of a company’s total worldwide annual turnover, whichever is higher, for the most serious breaches, such as using AI practices that the Act prohibits.

The requirements mean companies may have to review their operations, including their financial crime controls, to ensure they are in step with the rules.

Implications for AML controls

The EU AI Act applies to organisations both inside and outside the European Union whose AI systems are placed on the EU market or whose AI output is used within the bloc. Companies that use AI for AML and other financial crime prevention purposes may need to check whether the legislation applies to them and, if it does, ensure they comply. Some activities, such as research and development carried out before an AI system is placed on the market, are exempt from the regulations.

Benefits of implementing AI into AML systems

Companies may consider integrating AI into their AML systems because of its benefits such as speeding up processes and freeing up resources.

Evidence of impact is already emerging. PwC’s June compliance survey found that over two-thirds of British organisations expect AI to have a net positive effect by improving speed and reducing risk. The report also stresses that compliance teams need to understand the potential harms attached to AI, such as misinformation and cyber risks. HSBC reports similar results in practice: Group Head of Financial Crime Jennifer Calvery stated in June that AI has helped to “reduce the processing time required to analyse billions of transactions across millions of accounts from several weeks to a few days.”

Andrew Henderson, partner at Goodwin Law, noted that large language models (LLMs) can help compile KYC screening reports by processing data far faster than humans and surfacing anomalies. However, he stressed that human oversight remains essential: a money laundering reporting officer (MLRO), for example, may have an intuition that a machine lacks.

The EU AI Act and the financial services sector

The EU AI Act does not have a section dedicated to the financial services sector but, like the General Data Protection Regulation (GDPR), it is a general law that will affect firms in that industry. In effect, the AI Act tasks the financial services regulators in each member state with overseeing the use of AI by the firms they regulate, Henderson said.

The legislation does not deem the financial services sector as a whole to be high risk, but there are areas of the industry where AI-related risks can be identified, such as a bank using the technology to make lending decisions or an insurer using AI-based systems to make underwriting decisions for customers, Henderson said. The Act’s list of high-risk uses includes assessing the creditworthiness of individuals and risk assessment and pricing for life and health insurance.

Key points for compliance teams

Staff working in compliance departments at various companies may find they need to familiarise themselves with the EU AI Act, Bryony Long, partner and co-head of data, privacy and cyber at Lewis Silkin, told KYC360. Compliance workers first need to check which of their firm’s systems are AI-based. If any are, they then need to work out which of the law’s four risk categories each one falls under: unacceptable risk, high risk, limited risk, or minimal or no risk.

“Once this has been done, they then need to assess how their current duties will be affected by these new risks the company now has to deal with – for example, will the compliance officer need to spend more time on risk assessments in general, do they need to get training on some basic AI courses, and so on,” Long said. “Generally, a massive legislative development like the EU AI Act will need companies to spend funds on internal AI audits, which involve investigating how the law affects them, if at all, and how they can ensure they are in step with the rules,” she said. She added that firms that have built on AI-based systems supplied to them by external developers may find that they too are classified as providers under the rules, and may therefore take on more obligations.

The AI Act sets out a number of definitions, including that of an AI system: a machine-based system designed to operate with varying levels of autonomy. Risk is defined under the Act as the “combination of the probability of an occurrence of harm and the severity of that harm”, while “providers” includes developers of AI systems. According to Goodwin Law’s Henderson, the EU AI Act is an important source of obligations for regulators to enforce, and AML staff should ensure they properly understand how AI works at their companies and that it is implemented properly in the business. Firms could also hire third-party consultants to help implement AI internally, he added.

Conclusion

Overall, AI offers companies benefits such as speed and business efficiency, but it can also present potential harms to customers in key sectors, including financial services.

This is where regulations such as the EU AI Act come in: the rules aim to bolster innovation at companies while also addressing various risks, with potentially large fines for those who fail to comply. Compliance officers will need to map their AI systems, classify them under the Act’s risk tiers and prepare governance processes to remain compliant while realising AI’s benefits. The KYC360 Academy course "Harnessing AI for Financial Crime Prevention: Board Oversight and Strategic Implementation" examines common AI applications, best practices and regulatory considerations, and reviews industry case studies to draw out lessons learned.

This article is for general information purposes and should not be regarded as advice.

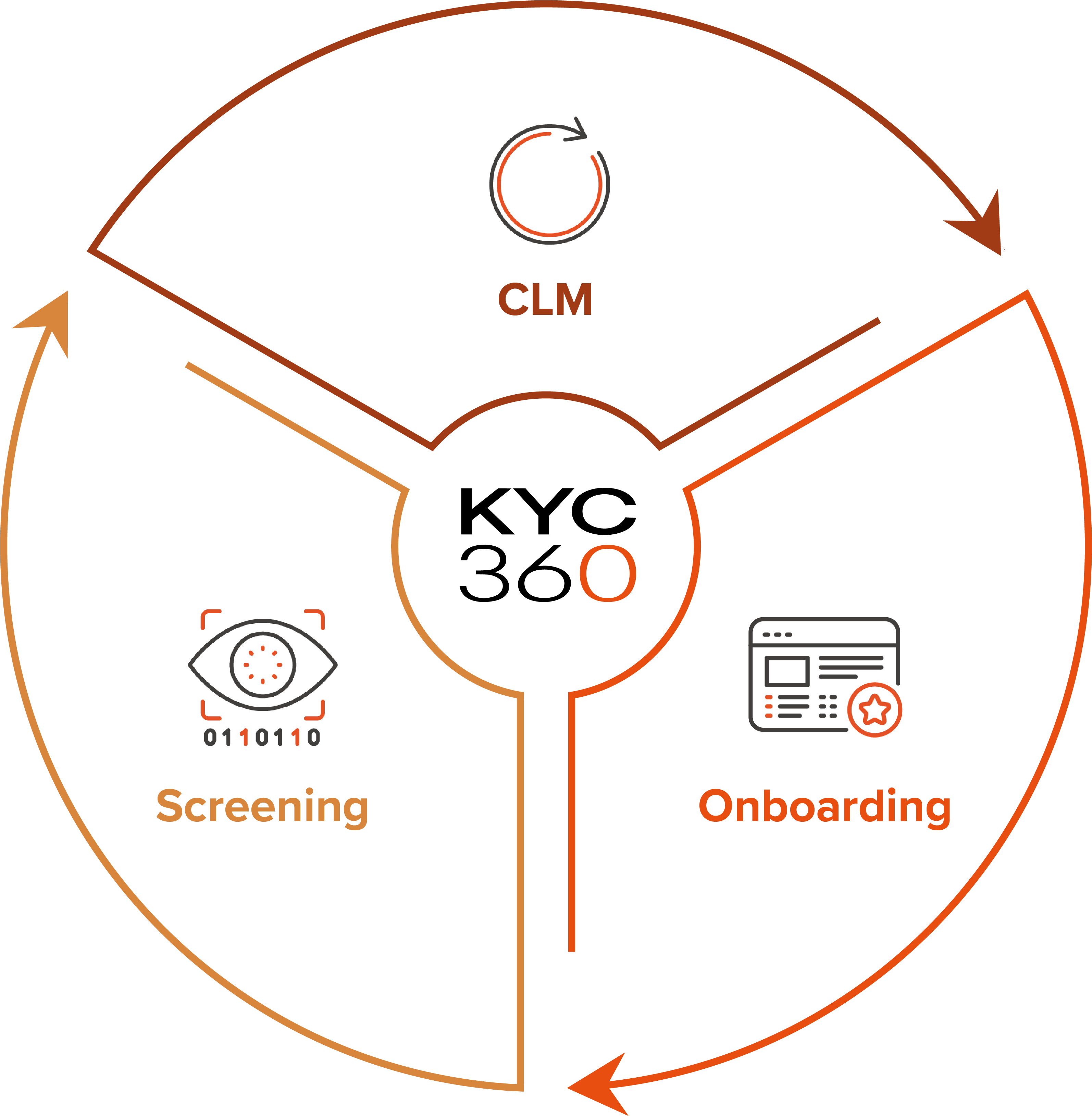

The KYC360 platform is an end-to-end solution offering slicker business processes with a streamlined, automated approach to Know Your Customer (KYC) compliance. This enables our customers to outperform commercially through operational efficiency gains whilst delivering improved customer experience and KYC data quality.