AI Regulation in the EU: The New Act Forges Ahead

By Irene Madongo

The European Union is moving forward with its regulation of artificial intelligence as the next phase of its new rules, covering obligations for general-purpose AI, comes into force. The deadline has also passed for member states to designate local authorities to oversee implementation of the measures. What is the law about, and what are some of its main aspects?

The EU AI Act came into force on 1 August 2024 and is considered the first comprehensive AI law globally, a regulatory tool for Europe’s fast-moving technology environment. With the new legislation, European lawmakers wanted rules that ensure AI systems in the bloc are transparent, safe and non-discriminatory, as well as a uniform definition of the technology. The AI Act seeks to encompass these ambitions, including in its definition of an AI system as a machine-based system that is designed to operate with varying levels of autonomy. Such a system may infer, from the input it receives, how to generate outputs such as predictions and recommendations that can influence physical or digital settings, according to the law. Risk, meanwhile, is defined under the Act as a “combination of the probability of an occurrence of harm and the severity of that harm”. “Providers” include developers of AI systems, while “deployers” are users of the systems, although this excludes personal non-professional activity.

A risk-based approach to EU AI regulations

The legislation takes a risk-based approach to AI regulation in the EU, setting out four levels of risk. The highest is “unacceptable risk,” under which AI systems deemed to be a threat to people’s rights, livelihoods and safety are banned. This includes practices such as social scoring and harmful AI-based manipulation.

The next level is the “high risk” category, which covers serious risks to health, safety or fundamental rights. This includes AI-based components of products, such as those used in robot-assisted surgery, and AI tools for employment, such as CV-sorting software. The third class is the “limited risk” level, which covers risks linked to transparency in AI. For example, people should be made aware that they are interacting with a machine when using chatbots, and AI-generated content, including deepfakes, should be clearly labelled. Lastly, there is the “minimal or no risk” level, which covers systems such as AI-enabled video games. The Act classifies these systems but does not introduce rules for the category. The EU Commission says many AI systems presently used in the bloc come under this category.

Bryony Long, a technology and AI lawyer at Lewis Silkin, told KYC360 she believes that “the EU has classified the risks associated with AI in a reasonable way – the items that are high risk, such as use of AI in workplace, do fit in this category because in most cases they will have significant impact on individuals.”

“However, there are carve-outs when systems are for more procedural or narrow tasks or otherwise assist in decision making, which means not every workplace system will be high risk,” said Long, who is also co-head of data, privacy and cyber at the law firm.

Key aspects of the new EU AI regulations

The obligations for general-purpose AI models apply from 2 August 2025, which was also the deadline for EU countries to designate the authorities that will oversee the application of the rules for AI systems. At national level, the AI Act is enforced through these national competent authorities (NCAs) in member states.

The legislation is also enforced through the AI Office, the European Commission's implementing unit for the legislation at EU level, which will supervise general-purpose AI models. The AI Office can request information from model providers and apply sanctions. “There’s a question of the adequacy of the national competent authorities undertaking oversight of AI in their local jurisdictions,” Long said. “Of course, EU countries have some capable NCAs or local regulators, but AI is a new type of law because it’s very technical – and these supervisors will likely need to hire technology experts to help them steer through and uphold the rules,” she said. The AI Office will also provide guidance to the European Artificial Intelligence Board (AI Board), which is made up of representatives from member states.

The AI Board’s tasks include issuing advice and helping to coordinate the NCAs responsible for enforcing the rules. The board is one of three advisory bodies under the Act, the other two being the Scientific Panel and the Advisory Forum. The law also enables the creation of regulatory sandboxes, a move expected to help foster innovation, and permits real-world testing of AI systems deemed to be high-risk.

Penalties

The AI Act applies to organisations inside and outside the EU whose AI systems are on the Union market or affect people in the bloc. Some activities, such as research that happens before an AI system is launched on the market, are exempt from the regulations. Member states will need to lay down penalties for failure to comply with the rules for AI systems. The Act sets out ceilings such as a penalty of up to €35 million or 7% of total global annual turnover for the preceding financial year, whichever is higher, for issues such as non-compliance linked to data matters. For small and mid-sized companies, the ceiling for infringements is the lower of the two amounts. Companies, such as providers of general-purpose AI models, can face fines of up to €7.5 million or 1.5% of their total global annual turnover for the preceding financial year for submitting incorrect information to NCAs in response to a request. EU institutions and agencies will also be subject to the rules, and may be hit with penalties by the European Data Protection Supervisor if they fail to comply.
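
The "higher of the two" and "lower of the two" rules described above come down to simple arithmetic. The Python sketch below is purely illustrative: the function name `fine_ceiling` and its parameters are hypothetical, the figures are the top-tier ones quoted in this article, and the result is only the maximum possible fine, not the amount an authority would actually impose.

```python
def fine_ceiling(fixed_cap_eur, turnover_pct, annual_turnover_eur, is_sme=False):
    """Return the maximum possible fine for one penalty tier (illustrative only).

    fixed_cap_eur       -- fixed ceiling for the tier, e.g. 35_000_000
    turnover_pct        -- percentage of global annual turnover, e.g. 7
    annual_turnover_eur -- total global turnover of the preceding financial year
    is_sme              -- True for small and mid-sized companies
    """
    turnover_cap = annual_turnover_eur * turnover_pct / 100
    # Larger companies face the higher of the two amounts;
    # small and mid-sized companies face the lower of the two.
    pick = min if is_sme else max
    return pick(fixed_cap_eur, turnover_cap)


# Top tier as quoted in the article: EUR 35 million or 7% of turnover.
# Turnover of EUR 1 billion: 7% is EUR 70 million, which exceeds EUR 35 million.
large_company = fine_ceiling(35_000_000, 7, 1_000_000_000)

# Turnover of EUR 100 million: 7% is EUR 7 million, so the fixed cap applies.
mid_company = fine_ceiling(35_000_000, 7, 100_000_000)

# Same turnover, but treated as an SME: the lower amount applies instead.
sme = fine_ceiling(35_000_000, 7, 100_000_000, is_sme=True)
```

In this sketch the large company's ceiling is set by its turnover, the mid-sized non-SME's by the fixed €35 million cap, and the SME's by the smaller turnover-based figure of €7 million.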

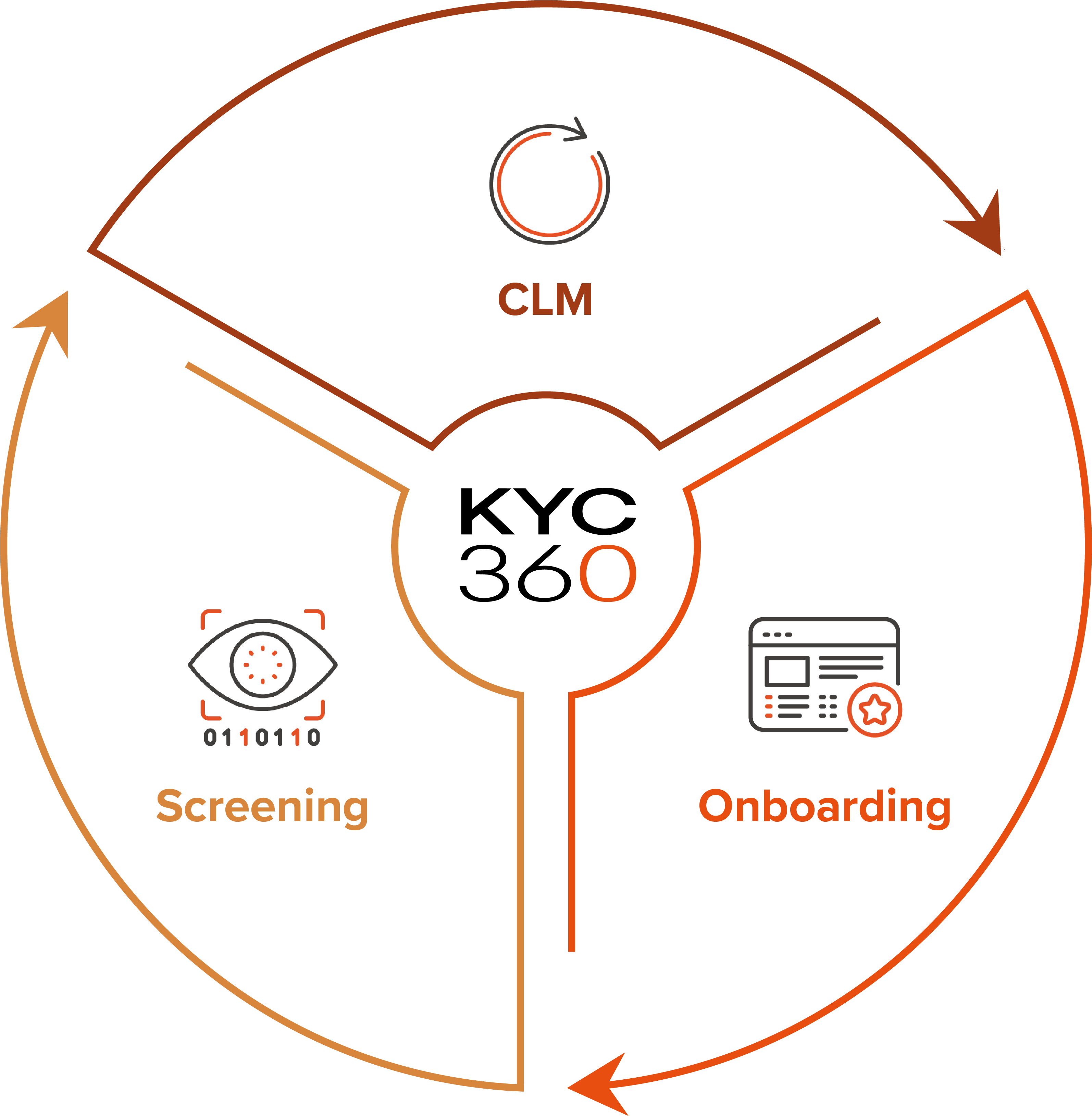
