How is AI being regulated? The UK’s stance and main areas to watch

By Irene Madongo

Artificial intelligence (AI) has wowed companies globally and, as you’d expect, caught the regulatory interest of governments, including Britain’s. But how is AI being regulated in the UK? The UK is keen to encourage the rollout of the technology but also wants to stay alert to its potential harms, making it an interesting scene for compliance departments to watch. Questions around how AI is being regulated have emerged as key areas for regulators and firms in various sectors in Britain, including finance, insurance, pensions, and investment. In January 2025, the government said an average of £200 million a day in private sector investment has been funnelled into Britain’s AI sector since Labour took office in 2024, or over £8.3 million per hour on average.

How is AI regulated in the UK? Exploring Britain’s current stance

So far, the UK, a leading European technology hub, has taken a pro-innovation approach towards AI. Tim Hickman, partner at White & Case LLP, told KYC360 that Britain wants to avoid being too prescriptive. A pro-innovation approach appears “sensible at this stage,” but it’s too soon to tell how well that approach will work, Hickman said, “because of the nature of technology – AI is a younger and less predictable industry compared to, say, financial services.” “Generally, it’s hard to predict the success of the pro-innovative approach as it involves risks which are not yet known,” Hickman said.

“Also, while the UK’s sector-specific regulators are experienced in their respective fields, they are each having to adapt to the constantly evolving challenges introduced by AI,” he said. The pro-innovation stance doesn’t mean there is no regulatory system in place, though. Currently, some of Britain’s established regulators address AI matters pertaining to their sectors, including the Competition and Markets Authority, the Information Commissioner’s Office and the Financial Conduct Authority (FCA). The FCA said in April 2024 that it is focused on how companies can safely adopt AI, as well as on understanding what effects AI innovations are having on consumers and markets. “This includes close scrutiny of the systems and processes firms have in place to ensure our regulatory expectations are met,” the watchdog said.

Nathalie Moreno, AI and data partner at law firm Kennedys, told KYC360 that extending and adapting existing legal and regulatory frameworks to govern AI use is not a comprehensive overhaul, but an incremental strategy. She believes that this approach brings challenges, and that a key issue is regulatory fragmentation. “Different regulators may apply inconsistent standards to similar AI systems, depending on sectoral context,” Moreno said. “Startups and cross-sector businesses may also risk falling through regulatory gaps, given the absence of a central enforcement authority for AI risks,” she said.

Key UK AI regulation developments: laws to watch

There have been some interesting developments in how AI is being regulated in the UK, including the AI Regulation white paper, which was published under the previous Conservative government in March 2023 and has been updated since. The white paper set out five principles for regulators to apply: safety, security and robustness; appropriate transparency and explainability; fairness; accountability and governance; and contestability and redress.

Meanwhile, on the legislative front, in the July 2024 King’s Speech, the government said it’s aiming to have “appropriate legislation” for requirements for those working to develop “the most powerful artificial intelligence models.” On 19 June 2025, the Data Protection (Use and Access) Act 2025, commonly known as the DUA Act, became law. A notable provision under the DUA Act is its limited authorisation of automated decision-making, Moreno said. Article 22 of the UK General Data Protection Regulation (GDPR) generally bans decisions based only on automated processing that produce legal or similarly significant effects on people, but “the DUA Act permits such decisions under defined statutory conditions,” Moreno said. But where special category data (such as health, race, or trade union membership) is involved, “automated decisions will continue to be prohibited unless a specific legal basis exists, such as explicit consent or a substantial public interest condition” under the Data Protection Act 2018, she said.

Other interesting moves on the UK scene include the reintroduction of the Artificial Intelligence (Regulation) Bill in March 2025 in the House of Lords. The bill, put forward by Lord Holmes, proposes the creation of an AI Authority that must have regard to the principles that regulation of the technology should deliver, which include safety, transparency, fairness, accountability and redress. The authority would also support sandbox initiatives and accredit independent AI auditors. The bill also proposes that companies that develop or use the technology have a designated AI officer who will be tasked with ensuring that it’s used safely and non-discriminatorily in the business, and that data used in the tech is unbiased. The draft law also covers areas such as transparency and intellectual property, proposing that those involved in training the technology submit to the AI Authority a record of third-party data and IP used in that training.

“While it appears that there is at least some political will to regulate AI in the UK, this Bill may not get the backing necessary for it to eventually become law,” Hickman said. The bill sets out high-level principles regarding AI regulation, but “several of its proposed requirements are not sufficiently granular to be enforceable. For example, the Bill calls for fairness in AI, however it does not specify how that obligation is to be measured,” he said.

Moving ahead with AI regulation

Separately, the government is working toward a future UK AI Bill, expected to set out matters such as statutory rules for high-risk AI and safeguards for the deployment of the technology, Kennedys’ Moreno notes. “There is growing recognition that any credible UK law must address general-purpose models, algorithmic accountability, transparency standards, and issues such as copyright infringement in training datasets,” she said. “Yet, policy tension remains. The UK government is clearly mindful of maintaining an attractive regulatory environment for global AI developers, particularly large US-based firms,” she said. “There is concern that overly prescriptive rules could be seen as trade barriers, stifling investment or innovation. The challenge for policymakers is to strike a balance: ensuring legal certainty and safety without compromising the UK’s ambition to be a global leader in AI innovation,” she said.

In January 2025, the government published its AI Opportunities Action Plan, saying its present pro-innovation approach to regulation is “a source of strength relative to other more regulated jurisdictions and we should be careful to preserve this.” The government noted that well-designed regulation can help enhance the safe development of AI, but warned that, if ineffective, it could hold back adoption of the technology in crucial sectors, like medicine. As AI regulation in the UK progresses, “it will be important for Parliament to make it clear precisely what obligations businesses are expected to satisfy, and how compliance with those obligations will be measured,” White & Case’s Hickman said.

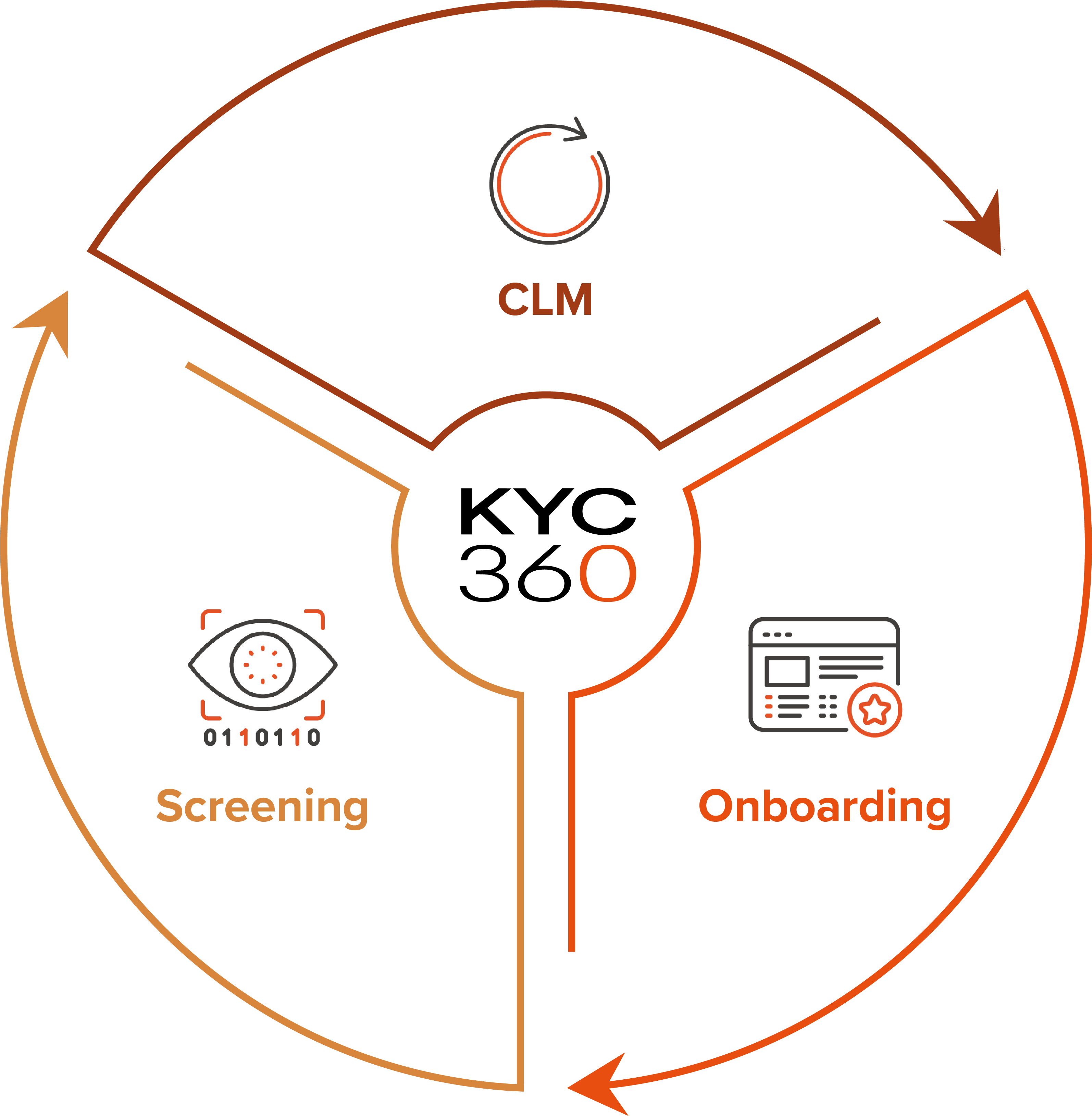

The KYC360 platform is an end-to-end solution offering slicker business processes with a streamlined, automated approach to Know Your Customer (KYC) compliance. This enables our customers to outperform commercially through operational efficiency gains whilst delivering improved customer experience and KYC data quality.